Failing (and learning) well

Being evaluated is something that requires intestinal fortitude.

Why, you ask? Because it requires the courage to ask the question: is my work any good? Is this thing I’ve poured my time, my money, and quite possibly my heart into any good at all?

A little over a year ago, I piloted an online training platform for new and novice evaluators. The platform trialed a new training model: it gave participants repeated opportunities to practice evaluation-related tasks and then receive immediate feedback on their performance.

I loved my idea. I’d spent months doing the background research, collecting real-world scenarios from expert evaluators, and fine-tuning the learning experience. People expressed interest, they signed up, we got everything ready.

And then: we launched.

Let me tell you, that was a new experience. What were my participants doing? What were they thinking? Why weren’t they logging in at 1 a.m.? Did they hate it? Were the practice activities so hard that people were discouraged? Were they so easy that everyone was bored? The whole experience offered a host of insights into what it might be like to be on the receiving end of an evaluator’s work.

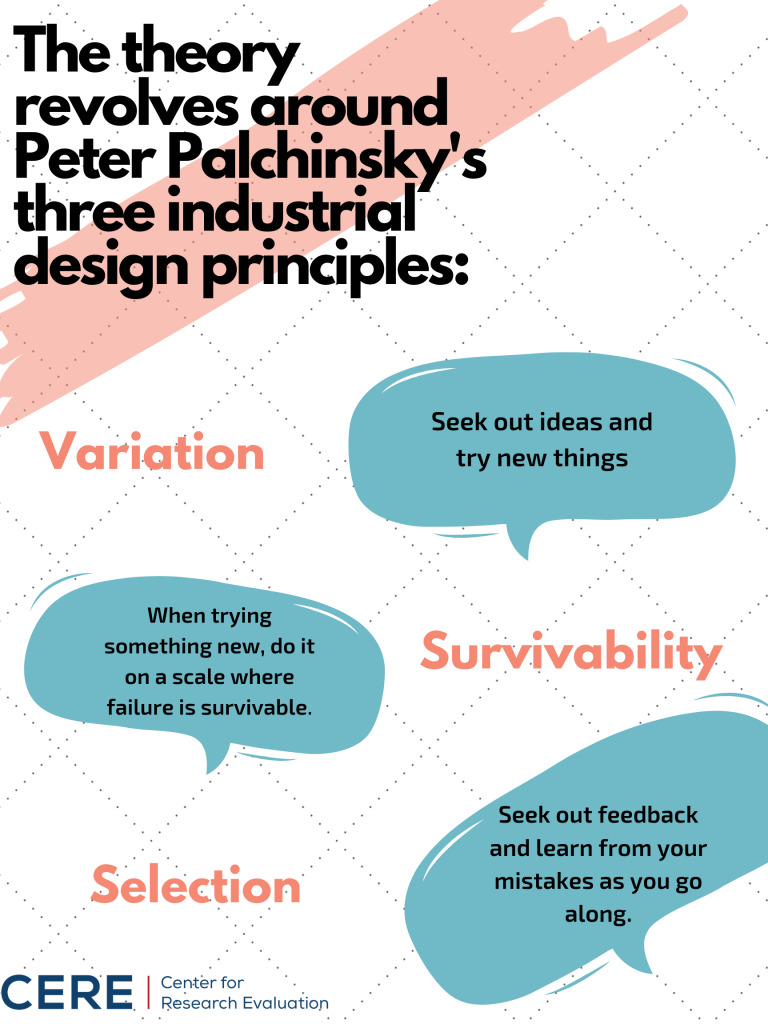

The Palchinsky Principles

At the time, I was reading Tim Harford’s book Adapt: Why Success Always Starts With Failure. One passage that struck me, and has continued to resonate since, was Harford’s discussion of the Palchinsky Principles: three principles for helping people fail well.

Peter Palchinsky was a Russian engineer who developed these three principles after watching large-scale Soviet infrastructure projects fail again and again. The principles were intended to walk people through the process of failing well, so that their hard work would ultimately lead to success.

Palchinsky said that to fail well, we need to (1) try out lots of different ideas rather than putting all our eggs in a single idea basket, (2) test them on a small scale so that if we fail, the consequences are not disastrous, and (3) embed quick feedback loops so that if we fail, we find out quickly and can cut our losses early.

What does this mean for evaluators—and people BEING evaluated?

At the time, reading this made me kick myself for trialing only one iteration of the idea instead of several.

But twelve months on, it also makes me wonder whether and how we, as program designers, implementers, evaluators, and funders, might embed these principles in our work.

For example: how might we support opportunities to test multiple potential solutions on a small scale? How might we openly and explicitly build the expectation that some of these WILL fail as we figure out solutions to complex problems? How can we make it quick and easy for programs to build fast feedback loops so we can sort out which ideas are working and which ones are not?

If you would like to experiment with ideas to embed the Palchinsky Principles into your work (or you already are), we’d love to hear from you.