Metric-ulating (how to work with and around metrics)

The donor community is abuzz with talk about metrics these days.

- “Applicants must include a list of baseline metrics for assessing program impact” (as many a request for proposals says).

- “Let’s get together and talk about which metrics we should measure! We want to make sure we can confidently talk about our impact” (says your friendly program director or CEO).

On the face of it, this all sounds reasonable. Metrics measure pre-determined characteristics (such as reach, quality, quantity, or impact) that signify elements of program quality and effectiveness. Who doesn’t want that?

Metrics have their place, for sure. Problem is, they’re often asked to play a role well outside their job description.

Consider this.

You work on an afterschool program that provides literacy tutoring to kindergarten-age students who need extra help with reading. You—like many others—have to include a list of metrics for measuring impact in your grant application.

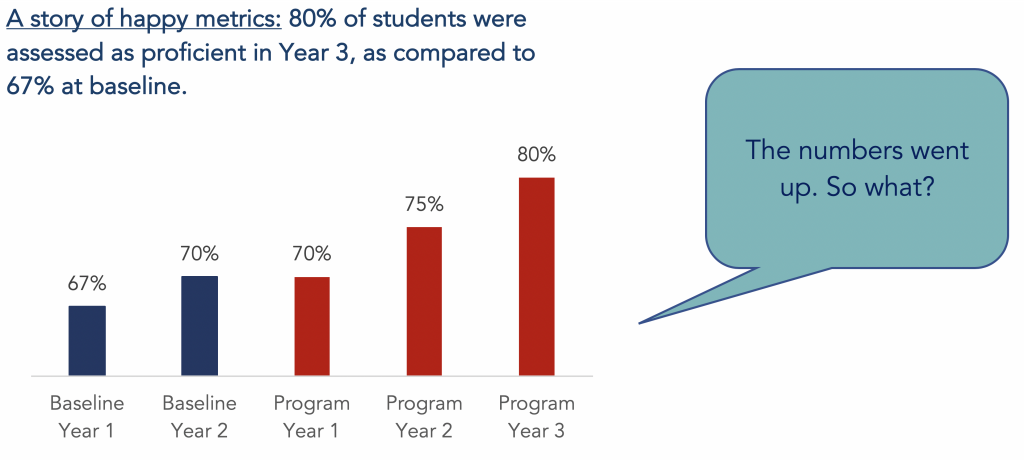

This seems sensible, so you put together a set of literacy-related metrics (you want to make sure they’re outcome focused!), including the percentage of students proficient on a standardized kindergarten readiness measure at the end of the school year. You record your baseline figures for that measure (67% and 70% in the two years before your program begins) in the grant application, and then diligently report on it throughout the life of your program:

- 70% proficient in Year 1,

- 75% proficient in Year 2,

- 80% proficient in Year 3.

Evaluation job done.

What’s the problem?

Embedded in the call for metrics, particularly metrics related to value and impact, is a set of three questions that usually go unstated:

- Question 1: Did this measure improve over the life of the project?

- Question 2 (the implicit causal question): Did my program contribute to improvements in this metric?

- Question 3 (the implicit value question): Is this program any good?

Setting aside for the moment Campbell’s Law (roughly, the more a quantitative social indicator is used for social decision-making, the more subject it becomes to corruption pressures), even a carefully measured metric that helps answer Question 1 is woefully ill-equipped to answer Questions 2 and 3.

Why don’t metrics help answer causal questions?

Metrics offer up a series of numbers (e.g., 67, 70, 70, 75, 80). This is great; the world loves numbers.

But genuine answers to Question 2 require attention to logic, not just numbers. More specifically, they require attention to the logic of causality: a chain of reasoning that allows us to confidently, and sensibly, talk about whether X (our literacy support program) contributed to Y (our literacy scores). Numbers without reasoning are just scratches on a page; it’s the reasoning behind the numbers that helps us form useful conclusions.

Say we get to the end of our literacy program, and our diligent program director reports back to her donors: 67% of students were proficient in the first baseline year; 80% were proficient in Year 3. A success for learning all round! Except she struggles to convince people that her program actually had an impact. Even though she did everything she was asked (she reported on her metrics), her claims about impact are undermined by persistent questions: “How do you know the increase is because of your program?” “What would have happened without the afterschool program? Would the numbers still have gone up?” “Was there anything else, beyond the afterschool program, that caused the numbers to go up?”
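To make the “would the numbers still have gone up?” question concrete, here is a minimal Python sketch using the example’s own figures. It rests on one loudly stated assumption: that, without the program, proficiency would simply have kept rising in a straight line at its pre-program rate. Two baseline points are far too few to estimate a trend with any confidence, and a straight-line projection is not a substitute for a proper comparison design; the names `project_trend`, `baseline`, and `observed` are hypothetical, chosen for illustration only.

```python
# Hypothetical illustration: compare observed proficiency against a naive
# straight-line projection of the pre-program trend. This is NOT a causal
# design; it only shows how an assumption about "what would have happened
# anyway" changes the story told by the same numbers.

baseline = [67.0, 70.0]        # percent proficient, two years before the program
observed = [70.0, 75.0, 80.0]  # percent proficient, program years 1-3


def project_trend(baseline, n_years):
    """Extend the line through the last two baseline points n_years forward.

    Assumption: the pre-program year-over-year change would have continued
    unchanged. This is a crude stand-in for a real counterfactual.
    """
    slope = baseline[-1] - baseline[-2]  # points gained per year before the program
    return [baseline[-1] + slope * (t + 1) for t in range(n_years)]


projected = project_trend(baseline, len(observed))
naive_gain = observed[-1] - baseline[0]  # the "headline" before/after change
gaps = [obs - proj for obs, proj in zip(observed, projected)]

print(f"Naive gain (Year 3 vs first baseline year): {naive_gain:+.0f} points")
for year, (obs, proj, gap) in enumerate(zip(observed, projected, gaps), start=1):
    print(f"Year {year}: observed {obs:.0f}%, trend projection {proj:.0f}%, gap {gap:+.0f}")
```

Under that assumption, the 13-point headline gain shrinks to a 1-point gap by Year 3, and Years 1 and 2 actually sit below the projected line. A different, equally defensible assumption about the counterfactual would give a different answer, which is exactly why the metric alone can’t settle Question 2.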

Making implicit questions explicit

Once we recognize the implicit questions embedded in calls for metrics, we can supplement metrics with evaluation approaches that actually target the questions at their core: the implicit causal question and the implicit value question.

Causal questions (e.g., Question 2) are better served by a set of carefully chosen research or evaluation designs that target the underlying premises of (and threats to) causal conclusions.

Similarly, value questions (e.g., Question 3) are better served by careful application of the logic of evaluation. Numbers alone won’t tell you whether your program is any good; a careful exploration of the criteria and standards that define ‘good’, combined with data and evaluative logic, can.

What to do?

So: the next time you’re asked to provide a set of metrics, stop and take a breath. Ask yourself what lies beneath the request, and set yourself the task of uncovering the implicit questions embedded in it. Once you’ve made those questions explicit, the evaluation world is your oyster: you can design an evaluation approach that answers them and gets you the information you need.